I was on Glassdoor again today and saved a job listing from Therapy Brands that I found helpful, given what the current hot market requires.

Here is what stuck out to me in the listing from glassdoor.com

DevOps/Site Reliability Engineer

"This candidate will need to have a fervor for empowering support, development and product teams to help set the company up for success!"

- Responsibilities

- Be part of software architectural discussions that will influence site reliability

- Be able to effectively communicate with engineering team, product management team, customer support team, as well as business leaders

- Implement centralized logging

- Build monitoring systems that support large SaaS products

- Build efficient and secure cloud infrastructure

- Create and build deployment processes that scale

- Improve site performance amidst growing user bases.

- Keep cloud infrastructure costs in check.

- Requirements

- 5+ years of experience in a DevOps, System Admin and/or Development role

- Good Linux skills

- Familiarity for immutable infrastructure.

- Familiarity with tools like Terraform, CloudFormation

- Experience with building CI/CD pipelines

- Experience with database tuning

- Experience working with cloud platforms (AWS, Azure)

- Passionate about source controlling everything

- Desirable

- Experience building applications in the healthcare space

- Experience/familiarity with test automation frameworks

- Experience with docker toolset: (kubernetes, ECS)

- Traits

- Problem solver

- Self-starter

- Collaborator

- Detail oriented

- Diligent

- Proactive

We’re serious about team member well-being. That’s why we are proud to offer the following employee benefits including but not limited to:

- Unlimited paid time off for eligible employees

- Generous Maternity and Parental Leave Plans

- 11 paid Company Holidays

- Comprehensive medical, dental and vision insurance available on day one

- 401K Safe Harbor plan with company match and 100% vesting on day one

- Meeting-Free Fridays

- Quarterly paid Community Service day

There is a lot there. I was tempted to remove parts of this, but in case they remove the listing by the time you are reading this, I wanted you to see the whole post. I've highlighted the items I will cover in this blog. The rest will be in one or more subsequent posts.

Prerequisites

- GitHub CLI (Not mandatory, but I will be using it because it's neat).

- GitHub account

- kubectl & minikube

- AWS account (this complies with free tier)

- Terraform

This tutorial also assumes you have a similar basic setup to the button click env tutorial, specifically IAM role setup.

What is Immutable Infrastructure?

If you have been in systems, infra, or operations for any amount of time, you have probably run into the following. You have a server. You tend to that server regularly: apply patches, clear local disk space, and so on. It seems pretty second nature to most of us. But what you may not appreciate is the cost, instability, and late-night effort it takes to maintain a server like that. With immutable infrastructure, you never modify a running server in place; you replace it with a new one built from a versioned definition. We want our infra to be easy to scale, secure, and cost efficient.

In this tutorial, we will be capturing the essence of immutable infra with Terraform and Docker.

Before we begin: a note about cost management in AWS.

I do my best to keep these tutorials within the AWS free tier limits. This tutorial is no different. But remember, you need to make sure you are managing your own billing portal. Scottyfullstack is not responsible for exceeding limits in AWS. Check out the billing dashboard to set budgets and alerts.

Now, for this project we want to capture the essence of immutable infra with Terraform, kubernetes, RDS, GitHub, and Docker. Unlike in Button Click Environment, I am NOT going to be using Jenkins to push the infra. I suggest you put these two tutorials together. It will also serve as a great memorizing exercise.

Step 1: Create Our Repo with the GitHub CLI.

Assuming you are in the directory of your project and have the GitHub CLI installed, log in with:

gh auth login

Then create the repo with your github namespace.

gh repo create scottyfullstack/devops04-immutable-infra

That repo will now be initialized in your project directory.

Finally, for our ease of use, let's grab the Terraform .gitignore:

wget -O .gitignore https://raw.githubusercontent.com/github/gitignore/master/Terraform.gitignore

and then commit and push it up

git add .

git commit -m "initial commit"

git push origin master

Step 2: Terraform RDS

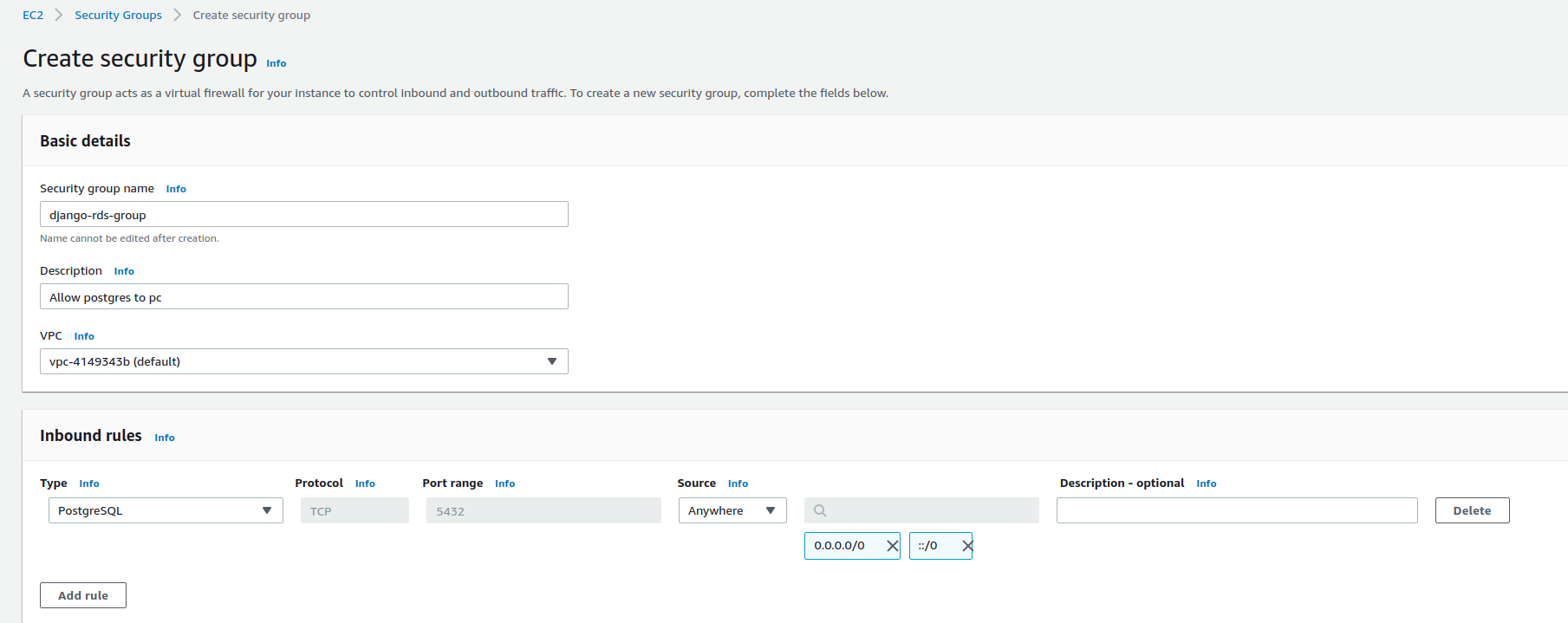

Before we begin, let's add a security group to the EC2 console.

Navigate to the aws console > EC2 > Security Groups > create security group.

Depending on your need here you can customize this. I am just going to allow all on the inbound.

Once it's created grab that id (i.e. sg-f72319ab) and we will use it in our terraform below.
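If you would rather not allow all inbound traffic, the same kind of group can be created from the command line and limited to the Postgres port. This is only a sketch, not what I did above: it assumes the AWS CLI is installed and configured for your profile, that you are using the default VPC, and the helper name, group name, and CIDR are placeholders for illustration.

```shell
#!/usr/bin/env bash
# Sketch: create a security group that only admits Postgres (5432) from one CIDR.
# create_postgres_sg is a hypothetical helper, not part of the AWS CLI.
create_postgres_sg() {
  local name="$1" cidr="$2" sg_id

  # Ask create-security-group for just the new GroupId as plain text.
  sg_id="$(aws ec2 create-security-group \
    --group-name "$name" \
    --description "Postgres access for the immutable infra tutorial" \
    --query 'GroupId' --output text)" || return 1

  # Open only TCP 5432 to the given CIDR instead of allowing everything.
  aws ec2 authorize-security-group-ingress \
    --group-id "$sg_id" --protocol tcp --port 5432 --cidr "$cidr" \
    >/dev/null || return 1

  # Print the id so it can be pasted into vpc_security_group_ids.
  echo "$sg_id"
}

# Example (203.0.113.7/32 is a documentation address; use your own IP):
#   create_postgres_sg devops04-rds 203.0.113.7/32
```

The printed id takes the place of sg-f72319ab in the Terraform below.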

Checkout a new branch

git checkout -b sparlor-RDS-01

For our database, we will be using postgres held in AWS RDS.

mkdir infrastructure

mkdir infrastructure/rds/

cd infrastructure/rds

touch main.tf variables.tf terraform.tfvars

open those files in your editor

in main.tf

provider "aws" {

profile = var.profile

region = "us-east-1"

}

resource "aws_db_instance" "default" {

allocated_storage = 20 #free tier

storage_type = "gp2" #general purpose SSD

engine = "postgres"

instance_class = "db.t2.micro"

name = "contacts_db"

username = "postgres"

password = var.password

identifier = var.id

vpc_security_group_ids = ["sg-f72319ab"]

publicly_accessible = true

}

and in variables.tf

variable "profile" {

description = "Profile to authenticate to AWS"

}

variable "password" {

description = "the password of the database we are creating"

}

variable "id" {

description = "the friendly name you want to provide the RDS instance in AWS"

}

and finally define the vars in the tfvars file (not checked into source control)

profile="scottyfullstack"

password="Peegh7guib8GaevaMa8uv0po"

id="django-rds"
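Because terraform.tfvars holds the database password, it is worth confirming that the .gitignore downloaded in Step 1 really excludes it before committing anything. A small sketch, assuming the template lists `*.tfvars` on a line of its own; the helper name is made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch: succeed only if the given .gitignore has a literal "*.tfvars" line.
# tfvars_ignored is a hypothetical helper; the path defaults to ./.gitignore.
tfvars_ignored() {
  local gitignore="${1:-.gitignore}"
  # -x: match the whole line, -F: treat the pattern as a fixed string
  grep -qxF '*.tfvars' "$gitignore"
}

# Example, run from infrastructure/rds (the .gitignore lives at the repo root):
#   tfvars_ignored ../../.gitignore && echo "tfvars are ignored"
```

For the authoritative answer, `git check-ignore -v terraform.tfvars` asks git directly which rule, if any, matches the file.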

Also, while we are at it, let's commit our new code and create a pull request for it:

git add .

git commit -m "add RDS terraform"

gh pr create

and since you are likely your own reviewer you can go ahead and merge it to master:

gh pr merge

Then run init and apply with your variables file (plan first if you'd like).

Since I am going to be showing some CI/CD later and don't want to repeat what I did in DevOps Button Click, I am going to apply this from the command line. If you'd like to set up a job to apply and destroy these, check that article out.

terraform init

terraform plan -var-file=terraform.tfvars

terraform apply -var-file=terraform.tfvars --auto-approve
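Once the apply finishes, you will need the instance's hostname for the connection string in the next step. One way to fetch it without opening the console is sketched below. It assumes the AWS CLI is configured for the same profile and region as Terraform, and `rds_endpoint` is a helper name made up for this example.

```shell
#!/usr/bin/env bash
# Sketch: print the hostname of an RDS instance given its identifier.
# rds_endpoint is a hypothetical helper wrapping a real AWS CLI call.
rds_endpoint() {
  local id="$1"
  aws rds describe-db-instances \
    --db-instance-identifier "$id" \
    --query 'DBInstances[0].Endpoint.Address' \
    --output text
}

# Example, using the id from terraform.tfvars:
#   AWS_PROFILE=scottyfullstack rds_endpoint django-rds
```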

Step 3: Kubernetes

Checkout a new branch

git checkout -b sparlor-001-kubernetes

Starting from the root of the repo, move into our infrastructure/ directory, create a new dir called kubernetes, and then create new main.tf, variables.tf, and terraform.tfvars files:

cd infrastructure

mkdir kubernetes

cd kubernetes

touch main.tf variables.tf terraform.tfvars

in main.tf we add the following:

- The provider is Kubernetes, which is DIFFERENT from the AWS provider we used for the RDS instance.

- Namespace of "products"

- A secret to connect to the postgres instance created above

- the deployment with our image and secret volume included

- and the service that we are tying to our deployment

provider "kubernetes" {

config_path="~/.kube/config"

}

resource "kubernetes_namespace" "products" {

metadata {

name="products"

}

}

resource "kubernetes_secret" "products_db" {

metadata {

name="postgresdb"

namespace=kubernetes_namespace.products.metadata.0.name

}

data = {

DATABASE_URL=var.database_url

}

type = "Opaque"

}

resource "kubernetes_deployment" "products_deployment" {

metadata {

name="products"

namespace=kubernetes_namespace.products.metadata.0.name

}

spec {

replicas=1

selector {

match_labels = {

app = "products_api"

}

}

template {

metadata {

labels = {

app = "products_api"

}

}

spec {

container {

image = "scottyfullstack/basic-rest-api"

name="products"

port {

container_port = 8000

}

env {

name = "DATABASE_URL"

value_from {

secret_key_ref {

key = "DATABASE_URL"

name = kubernetes_secret.products_db.metadata.0.name

}

}

}

}

volume {

name = kubernetes_secret.products_db.metadata.0.name

secret {

secret_name = kubernetes_secret.products_db.metadata.0.name

}

}

}

}

}

}

resource "kubernetes_service" "products_svc" {

metadata {

name = "products-svc"

namespace= kubernetes_namespace.products.metadata.0.name

}

spec {

selector = {

app = kubernetes_deployment.products_deployment.spec.0.template.0.metadata.0.labels.app

}

type = "NodePort"

port {

port = 8000

target_port = 8000

}

}

}

in variables.tf

variable "database_url" {

description = "The connection string to psql rds instance."

}

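and finally the terraform.tfvars (swap in your own password and RDS endpoint; the database name must match the contacts_db we created in Step 2):

database_url="postgres://postgres:Peegh7guib8GaevaMa8uv0po@node-rds.ca6vr9jjlgch.us-east-1.rds.amazonaws.com:5432/contacts_db"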
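The connection string follows the usual `postgres://user:password@host:port/database` layout. As a sketch, it can be assembled from its parts rather than typed by hand. `build_database_url` and the `DB_PASSWORD` and `DB_HOST` variables are illustrative names, and this assumes the password contains no characters that need URL-encoding.

```shell
#!/usr/bin/env bash
# Sketch: assemble a Postgres connection URL from its parts.
# build_database_url is a hypothetical helper; the port defaults to 5432.
build_database_url() {
  local user="$1" password="$2" host="$3" db="$4" port="${5:-5432}"
  printf 'postgres://%s:%s@%s:%s/%s\n' "$user" "$password" "$host" "$port" "$db"
}

# Example: write the variable Terraform expects into terraform.tfvars
#   printf 'database_url="%s"\n' \
#     "$(build_database_url postgres "$DB_PASSWORD" "$DB_HOST" contacts_db)" \
#     > terraform.tfvars
```

Building the value this way keeps the database name and port in one place, which makes a mismatch between the RDS definition and the secret easier to spot.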

Now all we have to do is fire up a new minikube cluster, initialize Terraform in this directory, and apply it (or plan it first):

minikube start

terraform init

terraform plan -var-file=terraform.tfvars

terraform apply -var-file=terraform.tfvars

Then, assuming the pods are running fine:

minikube service products-svc -n products
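To check the "pods are running fine" assumption rather than eyeball it, kubectl can wait for the deployment to finish rolling out. A minimal sketch, assuming kubectl points at the minikube cluster; the helper name and the 120-second default timeout are arbitrary choices for illustration.

```shell
#!/usr/bin/env bash
# Sketch: block until the deployment has rolled out, or fail after a timeout.
# wait_for_rollout is a hypothetical helper around `kubectl rollout status`.
wait_for_rollout() {
  local namespace="${1:-products}" deployment="${2:-products}" timeout="${3:-120s}"
  kubectl rollout status "deployment/${deployment}" \
    -n "$namespace" --timeout="$timeout"
}

# Example: only open the service once the rollout has succeeded
#   wait_for_rollout && minikube service products-svc -n products
```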

Then we can commit, create a PR, and merge it since we are the reviewer.

git add .

git commit -m "add kubernetes terraform"

gh pr create

gh pr merge

This concludes this tutorial. There are two more planned for this job listing so check back here for more!

Scotty